3D point cloud and depth map reconstruction with a monocular liquid lens optical system

Abstract

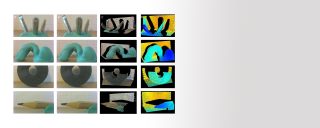

Liquid lenses are variable-focus components whose focus is set by an external control signal with high repeatability. If the focus distance is swept over a sufficiently large range, every object in the scene is captured in focus by the image sensor in one of the frames. Exploiting this, together with the known optical properties of the liquid lens, an all-in-focus image of the scene can be created, also called an image with Extended Depth of Field (EDoF). Furthermore, it is possible to create a 3D (more precisely, 2.5D, i.e. one depth value per pixel) representation of the entire view using only a single camera, that is, a single image sensor.
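The principle described above is commonly known as depth from focus (focus stacking). The following is a minimal NumPy sketch of that general technique, not the algorithm used in this work. It assumes a grayscale focus stack that is already registered, a squared-Laplacian sharpness measure smoothed over a small window, and a hypothetical list `focus_distances` that maps each frame to a focus distance. In a real liquid lens system this mapping would come from calibrating the lens control value against object distance. For each pixel, the sharpest frame supplies both the all-in-focus value and the depth.

```python
import numpy as np

def laplacian_energy(img):
    """Squared discrete Laplacian, used here as a per-pixel focus (sharpness) measure."""
    p = np.pad(img, 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return lap ** 2

def box_filter(a, r):
    """Mean over a (2r+1)x(2r+1) window via an integral image (same output shape)."""
    k = 2 * r + 1
    p = np.pad(a, r, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    s = c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]
    return s / (k * k)

def depth_from_focus(stack, focus_distances, r=2):
    """stack: (N, H, W) frames; focus_distances: N assumed focus distances, one per frame."""
    stack = np.asarray(stack, dtype=np.float64)
    sharpness = np.stack([box_filter(laplacian_energy(f), r) for f in stack])
    best = np.argmax(sharpness, axis=0)                     # sharpest frame per pixel
    depth = np.asarray(focus_distances, dtype=np.float64)[best]  # frame index -> distance
    all_in_focus = np.take_along_axis(stack, best[None], axis=0)[0]  # EDoF image
    return all_in_focus, depth, best

# Synthetic two-frame stack: the left half is sharp in the "near" frame,
# the right half is sharp in the "far" frame (distances in metres are made up).
yy, xx = np.indices((16, 16))
checker = ((yy + xx) % 2).astype(float)
near = np.where(xx < 8, checker, 0.5)
far = np.where(xx >= 8, checker, 0.5)
aif, depth, idx = depth_from_focus([near, far], focus_distances=[0.3, 1.0])
print(depth[8, 2], depth[8, 13])  # 0.3 1.0
```

The per-pixel argmax yields a coarse depth map quantized to the focus steps. Practical systems usually refine it, for example by interpolating around the sharpness peak, and run the per-frame filtering on a GPU. Pixels near the boundary between the two halves can be ambiguous because the smoothing window straddles both regions.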

In this white paper, we present a technical approach and an implementation that demonstrate this principle using Corning® Varioptic® lenses. The required image and video processing, including the algorithmic core, is implemented on the GPU of an embedded system, the NVIDIA® Jetson Nano™. In addition, we report performance figures such as image processing rate, spatial resolution, and acquisition time of the 3D representation.