Technology inside the data center is changing. But before we discuss how, we need to start with why. In 2017, nearly a quarter of a billion users logged onto the internet for the first time, and the number of users is already up 7 percent year-over-year in 2018. Social media sees 11 new users a second, and the average person is estimated to spend at least six hours a day online. By 2020, there are expected to be six to seven devices per person worldwide, and 13 per person in the US. So why is any of that important?

The answer is simple: revenue. Almost all companies have websites that they use to attract and interact with customers, and e-commerce earned nearly $1.5 trillion in 2017. If your website takes more than three seconds to load, you could be losing nearly a quarter of your visitors. Just one second of delay equates to an 11 percent loss of page views and a 7 percent reduction in conversions. A study conducted by Ericsson showed that a few seconds of video buffering can trigger the same increase in anxiety levels as watching a horror movie alone or trying to solve complex math problems. All of this translates to a need for faster connections and greater capacity.

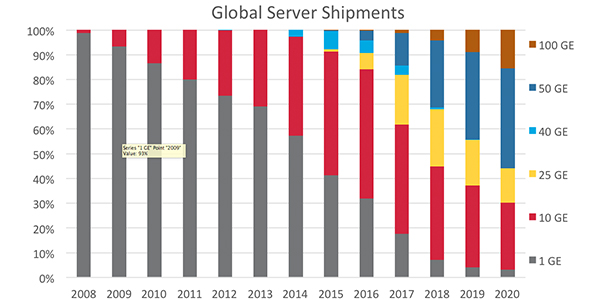

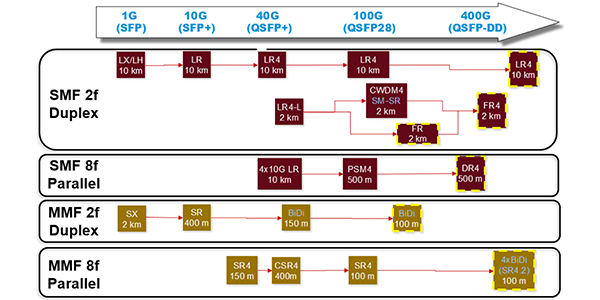

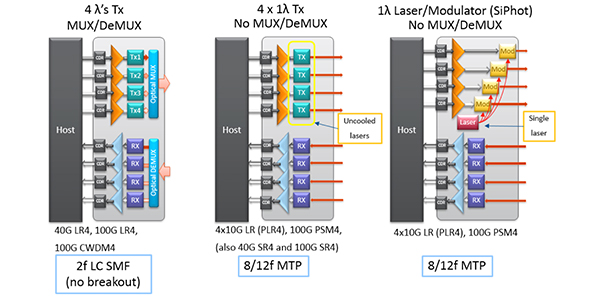

Server activity has increased over the last several years, and this increase is expected to continue. Server speed drives transceiver sales and development. As you can see in Figure 1, 1G connections are quickly becoming relics, and soon 10G will all but disappear as well. 25G transceivers currently have a foothold in the market, but should be eclipsed by 50G over the next few years. Additionally, many hyperscale and cloud data centers are expected to be early adopters of 100G server speeds. These higher server speeds can be supported in two ways: by 2-fiber transceivers of equal data rates, or by parallel optic transceivers of 40, 100, 200, and 400G at the switch, broken out into multiple lower-speed server links.
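To make the breakout relationship concrete, here is a minimal sketch of the arithmetic. It assumes each parallel optic switch port splits into four equal lanes, which is a common configuration but not the only one; the function names and the four-lane constant are illustrative and do not come from any vendor tool.

```python
# Assumption: a parallel-optic switch port breaks out into four equal lanes.
# Real transceivers may use other lane counts; adjust LANES_PER_PORT as needed.
LANES_PER_PORT = 4


def breakout_server_speed(switch_port_gbps: int, lanes: int = LANES_PER_PORT) -> int:
    """Return the per-server link speed (in Gb/s) for one broken-out switch port."""
    if lanes <= 0:
        raise ValueError("lanes must be a positive integer")
    if switch_port_gbps % lanes != 0:
        raise ValueError(
            f"{switch_port_gbps}G does not divide evenly into {lanes} lanes"
        )
    return switch_port_gbps // lanes


def breakout_table(switch_speeds_gbps):
    """Map each switch port speed to the server speed it supports via breakout."""
    return {speed: breakout_server_speed(speed) for speed in switch_speeds_gbps}


if __name__ == "__main__":
    # Switch port speeds named in the text above.
    for port, server in breakout_table([40, 100, 200, 400]).items():
        print(f"{port}G switch port -> {LANES_PER_PORT} x {server}G server links")
```

Under the four-lane assumption, the 40, 100, 200, and 400G switch ports line up with the 10, 25, 50, and 100G server speeds discussed above.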